The Rise of “Track”—A Legal Loophole in Disguise

As of late 2024, fifteen U.S. states had enacted laws restricting or outright banning facial recognition technology. These laws were meant to protect civil liberties and address growing concerns about accuracy and privacy. But a new AI-powered system named Track, developed by video analytics firm Veritone, has found a way around these bans, and it is drawing scrutiny from privacy advocates.

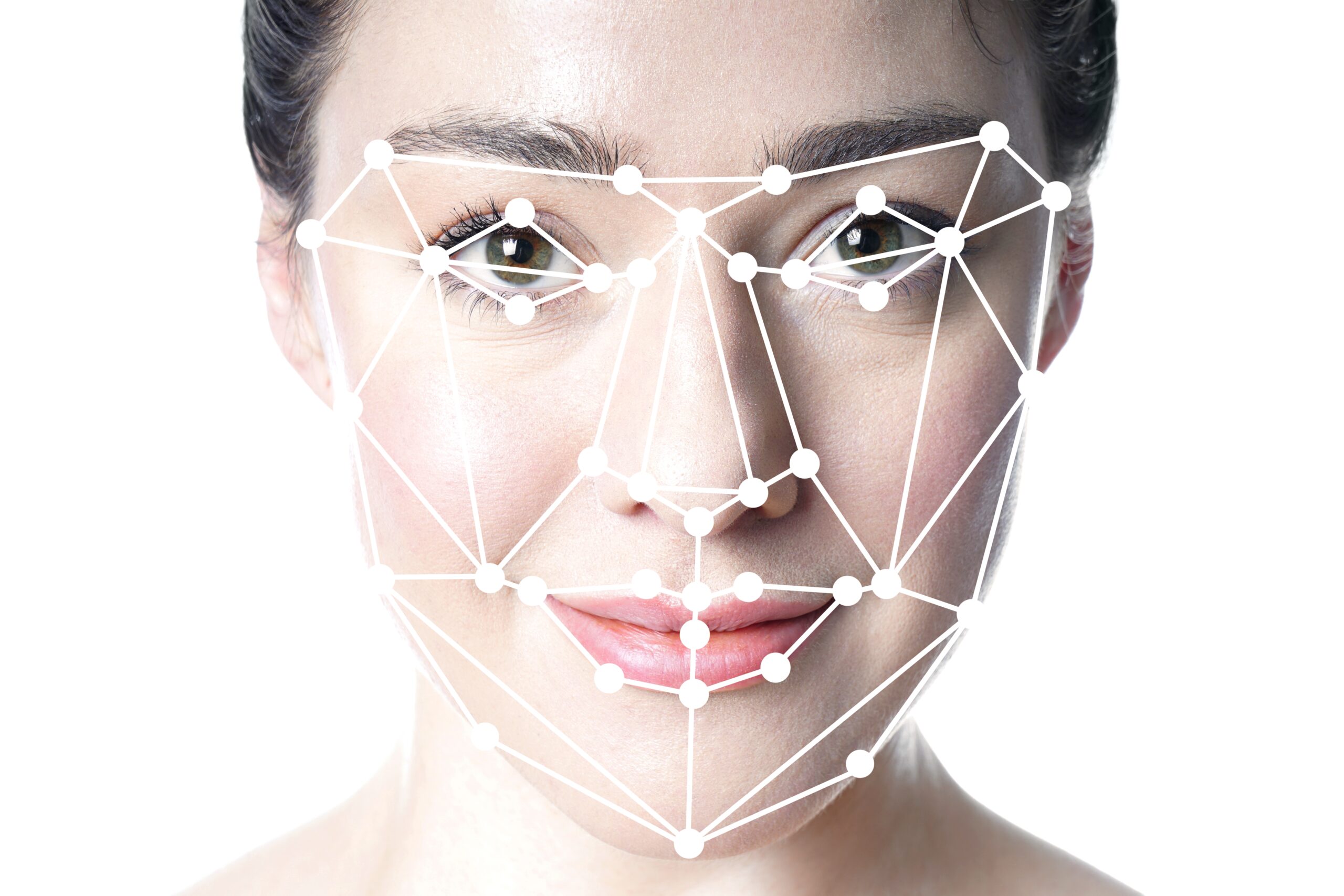

Track does not analyze facial data at all. Instead, it relies on nonbiometric identifiers such as body shape, clothing, hair, gender, and accessories to identify individuals without scanning their faces. In doing so, it sidesteps the restrictions that facial recognition laws impose.

How Does Track Work?

Track scans and analyzes footage from body cams, drones, security systems, social media videos, and more. Users select identifying attributes from drop-down menus, with categories such as footwear, hair, upper- and lower-body clothing, and accessories like hats, glasses, or bags.

For example, if law enforcement is searching for someone wearing a blue hoodie and carrying a backpack, they can input those details into Track. The AI then sifts through hours of footage and returns images that fit those descriptors. Over time, investigators can piece together a subject’s movements and behavior across different footage, all without ever analyzing the person’s face.
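To make the idea concrete, here is a minimal Python sketch of attribute-based search. It is not Veritone's implementation, whose internals are not public. The `Detection` record, the `search` function, and the attribute strings are all hypothetical, and the sketch assumes that a separate vision model has already tagged each sighting with descriptors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One person sighting from a video frame (hypothetical schema)."""
    camera_id: str
    timestamp: float       # seconds since the start of the footage window
    attributes: frozenset  # descriptors assumed to come from a vision model


def search(detections, wanted):
    """Return sightings that carry every wanted descriptor, oldest first."""
    wanted = frozenset(wanted)
    # Subset test: a sighting matches only if it has all requested attributes.
    matches = [d for d in detections if wanted <= d.attributes]
    # Sorting by time turns the matches into a rough movement timeline.
    return sorted(matches, key=lambda d: d.timestamp)


# Made-up sightings, purely for illustration.
detections = [
    Detection("cam-A", 10.0, frozenset({"upper:blue hoodie", "accessory:backpack"})),
    Detection("cam-B", 95.0, frozenset({"upper:red jacket", "accessory:backpack"})),
    Detection("cam-C", 240.0, frozenset({"upper:blue hoodie", "accessory:backpack",
                                        "footwear:sneakers"})),
    Detection("cam-B", 130.0, frozenset({"upper:blue hoodie"})),
]

timeline = search(detections, {"upper:blue hoodie", "accessory:backpack"})
for d in timeline:
    print(d.camera_id, d.timestamp)
```

Note that nothing in this sketch ties a match to a specific person. Anyone wearing a blue hoodie and carrying a backpack would appear in the same timeline.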

Privacy Concerns and Ethical Dilemmas

Veritone calls Track its “Jason Bourne tool” and touts its ability to exonerate the innocent, but privacy advocates are sounding the alarm. According to Nathan Wessler, a lawyer at the American Civil Liberties Union (ACLU), tools like Track create “a categorically new scale and nature of privacy invasion.”

Unlike facial recognition, which relies on immutable facial features, Track evaluates mutable characteristics such as clothing, posture, and accessories. Because many people can share the same outfit or bag, misidentification becomes even easier. That opens the door to false accusations, especially in marginalized communities already disproportionately affected by surveillance.

The Future of Surveillance Without Faces

Track is not just a technological workaround; it is a policy dodge. By exploiting legal loopholes, this AI tool could pave the way for surveillance strategies that are harder to regulate and more prone to misuse. Although it is marketed as a way to operate within privacy laws, it might actually undermine them entirely, creating new risks without clear accountability.

As the debate around AI and privacy intensifies, Track is a case study in how innovation can blur the lines between legality and ethics. It may not use your face, but it’s watching you all the same.

📝 Source

Original article from Futurism.